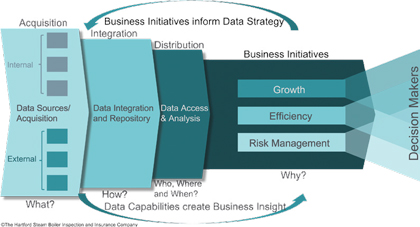

To understand what data we can bring together, we have to go back upstream to the Acquisition layer. At this layer, you need to take an inventory of the data you have and the data you have access to (the What).

Is the data accessible (free vs. purchased)? What are its value and accuracy? Where is it stored (cloud vs. on-prem)? These are just some of the questions you will need to answer about the data you have and whether it is worth bringing together.
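
To make the inventory step more concrete, here is a minimal Python sketch of a data catalog that records the attributes raised above (free vs. purchased, cloud vs. on-prem, value, accuracy, accessibility) and shortlists the assets worth bringing together. The `DataAsset` fields, the sample entries and the thresholds `min_value` and `min_accuracy` are hypothetical assumptions for illustration, not a prescribed method.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    """One entry in a hypothetical data inventory (all field names are illustrative)."""
    name: str
    access: str        # "free" or "purchased"
    location: str      # "cloud" or "on-prem"
    value: int         # assumed business-value score, 1 (low) to 5 (high)
    accuracy: float    # assumed fraction of records passing quality checks, 0.0 to 1.0
    accessible: bool   # do we currently have access to this source?


def worth_integrating(asset: DataAsset, min_value: int = 3, min_accuracy: float = 0.9) -> bool:
    """Keep only accessible assets that clear the (assumed) value and accuracy thresholds."""
    return asset.accessible and asset.value >= min_value and asset.accuracy >= min_accuracy


# Hypothetical sample inventory, for illustration only.
inventory = [
    DataAsset("pos_transactions", "free", "on-prem", 5, 0.97, True),
    DataAsset("third_party_demographics", "purchased", "cloud", 4, 0.85, True),
    DataAsset("legacy_loyalty_export", "free", "on-prem", 2, 0.95, True),
    DataAsset("supplier_portal_feed", "free", "cloud", 4, 0.92, False),
]

candidates = [a.name for a in inventory if worth_integrating(a)]
print(candidates)  # ['pos_transactions']
```

In practice the scores would come from profiling and business input rather than hard-coded numbers; the point is that each question in the inventory becomes an explicit, reviewable attribute.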

Another technology that is helping here is virtualization: the ability to connect to data sources, combine different data types and consume the data through multiple platforms and delivery systems. We have found that virtualization is key to continuing to deliver value to the organization while you are still executing on the enterprise data strategy.
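
As a rough illustration of the idea (not how any particular virtualization product works), the following Python sketch defines a "virtual view" that reads two hypothetical sources at query time, an in-memory SQLite table standing in for an operational database and a CSV extract standing in for a flat file, joins them without copying either into a warehouse, and delivers the same result as JSON and as CSV. The table, column and function names are assumptions made for the example.

```python
import csv
import io
import json
import sqlite3

# Source 1 (hypothetical): an operational database, simulated with in-memory SQLite.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE orders (customer_id TEXT, amount REAL)")
db.executemany("INSERT INTO orders VALUES (?, ?)",
               [("c1", 120.0), ("c2", 75.5), ("c1", 30.0)])

# Source 2 (hypothetical): a flat-file extract, simulated with an in-memory CSV string.
CUSTOMERS_CSV = "customer_id,region\nc1,APAC\nc2,EMEA\n"


def customer_revenue_view():
    """Virtual view: read both sources at query time and join them, persisting nothing."""
    regions = {row["customer_id"]: row["region"]
               for row in csv.DictReader(io.StringIO(CUSTOMERS_CSV))}
    totals = db.execute(
        "SELECT customer_id, SUM(amount) FROM orders "
        "GROUP BY customer_id ORDER BY customer_id")
    return [{"customer_id": cid, "region": regions.get(cid, "unknown"), "revenue": total}
            for cid, total in totals]


# The same view delivered in two formats for different consumers.
def as_json(rows):
    return json.dumps(rows)


def as_csv(rows):
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=["customer_id", "region", "revenue"])
    writer.writeheader()
    writer.writerows(rows)
    return out.getvalue()


rows = customer_revenue_view()
print(as_json(rows))
print(as_csv(rows))
```

The design choice in this sketch is that nothing is materialized: every call re-reads the sources, so consumers see current data at the cost of repeating the work on each query.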

As we continue on this journey of evolving technology capabilities, we know there will always be new tools and capabilities available. My suggestion is to find a suite of tools that already has some level of integration across your data strategy components and stick with that toolset (at least long enough to get your ROI out of the program).

This will save you the time of integrating the tools yourselves and keep you from veering off the path.

Additionally, I suggest establishing a cloud strategy and creating policies you can reuse as you expand. Organizations with immature cloud efforts will learn the hard way if they don't put guidelines in place to keep everyone aligned. Finally, find yourself a team of data warehouse experts who are open to change and willing to invest in building new skills.

They are out there, and they are the lifeblood of the evolving data organization. They know what data can be trusted and where it can be found.

If you don’t start to move away from data warehousing and take advantage of the improved technology, your organization will be stuck in the past. It will be surpassed by your competitors’ ability to access and use data. And your organization could end up “Same as it ever was…” or worse.